Adaptive Speech Quality Aware Complex Neural Network for Acoustic Echo Cancellation with Supervised Contrastive Learning

Publication: https://arxiv.org/abs/2210.16791v3

Abstract

This paper proposes an adaptive speech quality aware complex neural network for acoustic echo cancellation (AEC) in a multi-stage framework. The proposed model comprises a modularized neural network that focuses on feature extraction, acoustic separation, and mask optimization. To enhance performance, we adopt a contrastive learning framework during the pre-training stage, followed by fine-tuning using a complex neural network with novel speech quality aware loss functions. The proposed model is trained on 72 hours of audio for pre-training and 72 hours for fine-tuning. Experimental results demonstrate that the proposed model outperforms the state-of-the-art methods. Our approach provides a novel framework for AEC by leveraging the benefits of both the contrastive learning framework and the adaptive speech quality aware complex neural network.

1 Problem Formulation

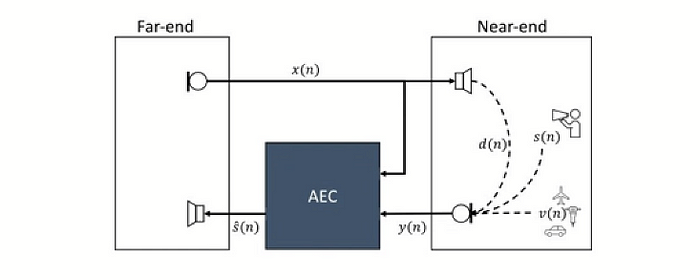

We illustrate the signal model of acoustic echo cancellation in the time domain. The microphone signal y(n) can be formulated as:

$$y(n) = s(n) + d(n) + v(n)$$

where s(n), d(n), and v(n) are the near-end speech, acoustic echo, and near-end background noise, respectively, and n is the time sample index. The echo d(n) is obtained by passing the far-end signal x(n) through the transmission path h(n); nonlinear distortion and system delay are introduced during this process. The acoustic echo cancellation task is to separate s(n) from y(n), given that x(n) is known.
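The signal model can be sketched in a few lines of NumPy. This is a minimal illustration under simplifying assumptions: the echo path is a purely linear FIR filter (the nonlinear distortion mentioned above is not modeled), the signals are random placeholders rather than speech, and the name `simulate_microphone` is hypothetical.

```python
import numpy as np

def simulate_microphone(s, x, h, v):
    """Return (y, d) with y(n) = s(n) + d(n) + v(n) and d(n) = (x * h)(n)."""
    # Linear echo path only; truncate the convolution to the signal length.
    d = np.convolve(x, h)[: len(s)]
    y = s + d + v
    return y, d

rng = np.random.default_rng(0)
n = 16000                               # one second at 16 kHz
s = rng.standard_normal(n)              # placeholder near-end speech
x = rng.standard_normal(n)              # placeholder far-end signal
v = 0.01 * rng.standard_normal(n)       # placeholder background noise
h = np.zeros(64)
h[10] = 0.5                             # toy echo path: 10-sample delay, gain 0.5
y, d = simulate_microphone(s, x, h, v)
```

An AEC model receives `y` and `x` and is expected to recover `s`.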

2 Contrastive Learning Pre-training Framework

Contrastive learning has gained much attention recently in computer vision. It is a technique that enables the model to learn the difference between data pairs and extract better image representations by minimizing the distance between similar data pairs and maximizing the distance between dissimilar ones. In the speech domain, recent studies have shown that contrastive learning can be used to pre-train an encoder to help downstream tasks achieve better results. Motivated by these findings, we propose a contrastive learning pre-training framework for acoustic echo cancellation (AEC) models to enhance their performance.

The proposed contrastive learning (CL) framework is shown in Figure 2 and consists of three parts: data pair generation, the AEC complex neural network, and the CL loss function. In the data generation stage, we perform data augmentation to enable contrastive learning, creating an anchor audio and its corresponding positive and negative audio pairs. The positive audio pairs share the same near-end audio as the anchor audio but have different far-end audio. The negative audio pairs differ from the anchor audio in both near-end and far-end audio. By maximizing the similarity between the anchor and its positive pairs and minimizing the similarity between the anchor and its negative pairs, our model can learn to distinguish between near-end and far-end audio in the microphone signal. An additional CL score head controls the training process of both AEC and CL. The anchor audio and contrastive audio are processed by the same AEC model with shared weights, with $h_x$ and $h_{x'}$ representing the features of the anchor and contrastive audio pair. The similarity between these features is calculated in the scoring head, and only the anchor feature is used in the AEC head. Binary cross-entropy is used as the loss function for the contrastive loss, which is expressed as follows:

where $sim(x,x^+)$ is the similarity score between the anchor feature and the positive feature at the frame level, and $sim(x,x^-)$ is the similarity score between the anchor feature and each negative feature.
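A binary cross-entropy contrastive loss of this kind could look like the following NumPy sketch. It is not the paper's exact formulation. The assumptions are that the similarity is the sigmoid of a frame-level dot product, that the positive pair is labeled 1 and each negative pair 0, and that the loss is averaged over frames and over negatives. The function names are hypothetical.

```python
import numpy as np

def frame_similarity(h_a, h_b):
    """Sigmoid of the per-frame dot product; inputs have shape (T, F)."""
    logits = np.sum(h_a * h_b, axis=-1)
    return 1.0 / (1.0 + np.exp(-logits))

def contrastive_bce(h_anchor, h_pos, h_negs, eps=1e-7):
    """BCE with target 1 for the positive pair and 0 for each negative pair."""
    sim_pos = np.clip(frame_similarity(h_anchor, h_pos), eps, 1 - eps)
    loss = -np.mean(np.log(sim_pos))                  # pull positives together
    for h_neg in h_negs:
        sim_neg = np.clip(frame_similarity(h_anchor, h_neg), eps, 1 - eps)
        # push negatives apart, averaged over the number of negatives
        loss += -np.mean(np.log(1.0 - sim_neg)) / len(h_negs)
    return loss
```

The loss is small when the anchor feature agrees with the positive feature and disagrees with the negatives, and large when the roles are swapped.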

3 AEC Fine-tuning Framework

As illustrated in Figure 3, our deep complex AEC network consists of three modules: the feature extraction network, the acoustic separation network, and the mask optimization network. To incorporate both the phase and amplitude information of the signal, the input to the network is the stacked real and imaginary parts of the microphone and far-end signals in the time-frequency domain. The microphone and far-end inputs each have dimensions B, T, and 2N, corresponding to the batch size, the number of audio frames, and the stacked real and imaginary components of the signal after the FFT.

3.1 Feature Extraction Network (FEN)

In FEN, a Convolutional Gated Recurrent Unit (CGRU) structure is utilized for extracting shallow feature representations of the signal. Specifically, the CGRU module comprises a one-dimensional convolution layer (Conv1D) and one GRU layer. The CGRU structure is designed to capture the characteristic information of the signal, while the Conv1D layer is responsible for capturing local contextual information. By adding a residual connection that concatenates the input with the output of the CGRU module, we obtain an extracted feature mask that is able to preserve the input signal information. The skip connection is shown to be advantageous in helping the CGRU module better understand the characteristic information of the signal.

3.2 Acoustic Separation Network (ASN)

ASN is designed to extract the near-end speech signal by applying two GRU layers on the input estimated feature mask, resulting in an output estimated near-end speech mask with half of the dimension size on the last dimension axis.

3.3 Mask Optimization Network (MON)

In MON, the estimated near-end speech mask and the original microphone input signal are concatenated as inputs to generate a better near-end speech mask. By leveraging the microphone signal information, MON can differentiate between the desirable and undesirable signal components and therefore achieve more efficient suppression of the acoustic echo. The output of MON, the estimated near-end remask, is obtained by adding its input back through a residual connection, thereby enhancing the performance in complex acoustic scenarios. This approach allows the network to effectively leverage temporal dependencies in the input signal and gradually refine the output estimate.

4 Loss Function Design

4.1 Mask Mean Square Error (MaskMSE) Loss

Both the acoustic separation network and the mask optimization network produce estimated near-end speech masks, $\hat{M}_{complex}$. The near-end speech is then obtained by multiplying the estimated mask with the microphone signal. The true mask ${M}_{complex}$ is generated from the ground-truth near-end speech $S(n)$ and the microphone input $Y(n)$.

The complex mask loss $\mathcal{L}_{M}$ is defined as the mean square error between the estimated complex mask $\hat{M}_{complex}$ and the true mask ${M}_{complex}$.
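The mask construction and the MaskMSE loss can be sketched as follows. This assumes the true mask is the complex ratio $S/Y$ per time-frequency bin, which is consistent with recovering speech by multiplying the mask with the microphone signal but is not stated explicitly here. The function names and the stabilizing `eps` are hypothetical.

```python
import numpy as np

def true_complex_mask(S, Y, eps=1e-8):
    """Complex ratio mask M with M * Y ≈ S; eps guards against division by zero."""
    return S * np.conj(Y) / (np.abs(Y) ** 2 + eps)

def mask_mse(M_hat, M):
    """Mean of |M_hat - M|^2 over all time-frequency bins."""
    diff = M_hat - M
    return np.mean(diff.real ** 2 + diff.imag ** 2)

# Toy spectra: the near-end speech is half of the microphone signal in every bin.
Y = np.array([1 + 1j, 2 - 1j, -0.5 + 0.25j])
S = 0.5 * Y
M = true_complex_mask(S, Y)
```

A perfect estimate gives `mask_mse(M, M) == 0`, and `M * Y` reproduces `S` up to the effect of `eps`.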

4.2 Error Reduction Loss

The error reduction loss function ${L}_{error}$ is introduced to improve the performance of the model. The error signal is defined as the difference between the microphone input $Y(n)$ and the ground-truth near-end speech $S(n)$. Ideally, the estimated complex mask should reduce the entire error signal to zero. This error is minimized by penalizing the product of the modulus of the estimated complex mask and the modulus of the error signal.
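One way to read this description is as the mean, over time-frequency bins, of the mask magnitude multiplied by the error magnitude. The sketch below implements that reading. The averaging and the function name `error_reduction_loss` are assumptions, not taken from the paper.

```python
import numpy as np

def error_reduction_loss(M_hat, Y, S):
    """Mean of |M_hat| * |Y - S| over all time-frequency bins."""
    error = Y - S  # echo plus noise that the mask should suppress
    return np.mean(np.abs(M_hat) * np.abs(error))

# Toy example with two bins.
M_hat = np.array([1 + 0j, 0.5j])
Y = np.array([2 + 0j, 2j])
S = np.array([1 + 0j, 0j])
loss = error_reduction_loss(M_hat, Y, S)
```

The loss vanishes when the microphone signal already equals the clean speech, or when the mask is zero wherever an error is present.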

4.3 Speech Distortion Compensation Weighted (SDW) Loss

The speech distortion compensation weight is an adaptive speech quality aware weight that applies a non-linear weighting to MaskMSE. At frequency bins where the true mask ${M}_{complex}$ is larger than 1, clean speech dominates over noisy speech. At such frequency bins, SDW compensates for speech distortion in the estimated speech by applying a weighted power function to MaskMSE.

4.4 Echo Suppression Compensation Weighted (ESW) Loss

The echo suppression compensation weight is an adaptive speech quality aware weight that applies a non-linear weighting to MaskMSE. At frequency bins where the true mask ${M}_{complex}$ is less than 1, noisy speech dominates over clean speech, and the signal should be suppressed. At such frequency bins, ESW suppresses echoes in the estimated speech by applying a weighted power function to MaskMSE.

The total loss is calculated as the weighted sum of the AEC loss and the contrastive loss, as follows:

$$L = L_{AEC} + \alpha L_{CL}$$

where $L_{AEC} = L_M + L_E + L_{SDW} + L_{ESW}$, $L_{CL}$ is the contrastive loss, and $\alpha$ is the weight parameter that controls the contribution of the contrastive loss, set to 1 in the CL pre-training stage and 0 in the AEC fine-tuning stage.

5 Experiments

5.1 Dataset

The proposed AEC system was trained and validated on a dataset consisting of 90 hours of audio, of which 72 hours were used for training and the remaining 18 hours for validation. The clean speech used for generating the far-end and near-end audio was obtained from multilingual data provided by Reddy et al. \cite{reddy2021icassp}. The dataset comprises speech in French, German, Italian, Mandarin, English and others. To further enhance the quality of the audio, the DTLN model \cite{westhausen2020dual} was used to remove possible noise before generating the far-end and near-end audio.

To create the echo, far-end, and near-end signals, we follow exactly the same data processing as \cite{dtln_aec}, discarding 20\% of the near-end audio to create the near-end only scenario.

All signals were normalized to (-25, 0) dB and segmented into 4-second segments before being passed into the network. The proposed dataset provides a diverse range of acoustic environments and enables the evaluation of the AEC system under different challenging conditions.

5.2 Experiment Set-up

The input of our architecture, denoted as $y(n)$, is sampled at a rate of 16 kHz and taken in frames of 512 points (32 ms) with 8 ms of overlap between consecutive frames. After the fast Fourier transform (FFT), this leads to a 513-dimensional spectral feature in each frame, considering both real and imaginary values. The total number of input features is 1026, covering both the microphone signal and the far-end (reference) signal. The overall neural network architecture consists of three components, namely FEN, ASN, and MON. The network layers are a combination of Conv1D layers with 128 filters and GRU layers with 512 units.
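The framing arithmetic can be checked with a short script. It reads the 8 ms figure literally as the overlap between frames. If 8 ms is instead the frame shift, `hop` should be set to 128 samples. The helper names are hypothetical, and the 513-dimensional feature size is taken from the text rather than derived.

```python
SAMPLE_RATE = 16_000  # Hz

def ms_to_samples(ms, sample_rate=SAMPLE_RATE):
    """Convert a duration in milliseconds to a whole number of samples."""
    return int(round(ms * sample_rate / 1000))

def num_frames(n_samples, frame_len, hop):
    """Number of full frames that fit in the signal without padding."""
    if n_samples < frame_len:
        return 0
    return 1 + (n_samples - frame_len) // hop

frame_len = ms_to_samples(32)       # 32 ms frame
overlap = ms_to_samples(8)          # 8 ms, read literally as overlap
hop = frame_len - overlap           # frame shift under that reading
segment = 4 * SAMPLE_RATE           # one 4-second training segment
frames_per_segment = num_frames(segment, frame_len, hop)

features_per_signal = 513           # per-frame feature size stated in the text
total_input_features = 2 * features_per_signal  # microphone + far-end
```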

To train the proposed AEC system, a two-stage training strategy is employed. In the pre-training stage, both the contrastive loss and the AEC loss are used with an initial learning rate of $10^{-3}$. In the fine-tuning stage, the CL framework is removed and only the AEC loss is employed with an initial learning rate of $10^{-5}$. The ReduceLROnPlateau learning rate scheduler in TensorFlow monitors the validation loss; if the validation loss does not improve for 5 epochs, the learning rate is reduced by a factor of 0.1 until it reaches $10^{-10}$. The model is trained with the Adam optimizer \cite{kingma2014adam}.
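The plateau rule can be illustrated with a simplified pure-Python re-implementation. This is a sketch of the behavior described above, not the Keras source. In Keras the corresponding settings would be `ReduceLROnPlateau(monitor="val_loss", factor=0.1, patience=5, min_lr=1e-10)`. The sketch ignores options such as `min_delta` and `cooldown`, and the function name `plateau_schedule` is hypothetical.

```python
def plateau_schedule(val_losses, lr=1e-5, factor=0.1, patience=5, min_lr=1e-10):
    """Return the learning rate in effect after each epoch's validation loss."""
    best = float("inf")
    wait = 0          # epochs since the last improvement
    history = []
    for loss in val_losses:
        if loss < best:
            best, wait = loss, 0
        else:
            wait += 1
            if wait >= patience:
                lr = max(lr * factor, min_lr)  # never go below the floor
                wait = 0
        history.append(lr)
    return history

# Validation loss that stops improving after the first epoch.
rates = plateau_schedule([1.0] * 6)
```

With the fine-tuning settings, the rate stays at its initial value for the first five epochs and drops by a factor of ten on the sixth.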

To evaluate the performance of the proposed AEC system and compare it with the state-of-the-art approaches, DTLN \cite{dtln_aec} and the ICASSP-2022 Microsoft competition baseline \cite{icassp_2022_aec} are used as baselines and denoted as baseline in Table 1.

6 Results and Discussion

The performance of different methods under different noise levels (SNR) is summarized in Table 1 in terms of Perceptual Evaluation of Speech Quality (PESQ) \cite{pesq} and Extended Short-Time Objective Intelligibility (ESTOI) \cite{ETSOI}. PESQ and ESTOI evaluate the speech quality and intelligibility of the enhanced speech, respectively. Two types of noise, room noise and music noise, are added for evaluation.

Our pre-trained and fine-tuned AEC models outperform DTLN \cite{dtln_aec} and the ICASSP-2022 Microsoft competition baseline \cite{icassp_2022_aec} under various noise levels. For example, at a 30 dB input noise level, the pre-trained model achieves a PESQ of 3.204 in room noise, compared to 2.775 for DTLN and 3.0275 for the baseline model. After fine-tuning, the performance improves further to a PESQ of 3.4624 in room noise and 3.5112 in music noise at the same 30 dB input noise level. Additionally, the model has been evaluated using the latest evaluation metric, Perceptual Objective Listening Quality Assessment (POLQA), which provides a new measurement standard for predicting Mean Opinion Scores (MOS) and outperforms the older PESQ standard. Our best model achieved an average POLQA of 3.84 across input noise levels, outperforming the state-of-the-art result (3.22 POLQA).

The before-and-after spectrum figure visualizes the benefit of the adaptive speech quality aware method. It shows the difference in the spectrum of an example of enhanced audio before and after using SDW and ESW. By applying them, the model can remove residual echo while maintaining speech quality by compensating for distortion in both low and high-frequency bands.

7 Conclusion

The paper presents a novel approach to speech enhancement and echo cancellation through the use of an adaptive speech quality aware complex neural network with a supervised contrastive learning framework. The proposed contrastive learning pre-training framework for AEC models enables the model to learn the difference between data pairs and extract better audio representations, resulting in improved performance in downstream tasks. The proposed AEC fine-tuning framework consists of three neural network blocks, FEN, ASN, and MON, each designed to target a different task of AEC. The adaptive speech quality aware loss functions bring significant benefits in removing echoes and improving speech quality. The experiments show that the proposed framework outperforms the state-of-the-art results in terms of average PESQ and ESTOI values under different noise levels.