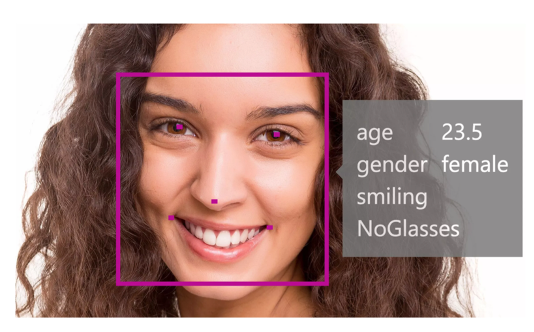

CelebA Facial Attribute Recognition Challenge

How to deal with domain gap and imbalanced data in a large dataset.

GitHub Repository: https://github.com/bozliu/CelebA-Challenge

1 Challenge Description

The goal of this challenge is to predict 40 attribute labels for a facial photograph. The data for this task comes from the CelebA dataset [1], which contains about 200 thousand images, each annotated with 40 attribute labels. Specifically, the challenge data for this course consists of 160,000 images for training, 20,000 images for validation and 20,000 images for testing. The images have been pre-cropped and aligned to make the data more manageable.

2 The Problem of Domain Gap and Imbalanced Data

The baseline model is a ResNet-50 trained with a cross-entropy classification loss. The training label frequency and the test-set accuracy of each attribute are shown in Figure 2. The figure shows that the label frequencies follow a long-tailed distribution. The average accuracy does not correlate with the long tail, and the variance of accuracy across attributes is relatively high. This imbalance reflects the discrepancy between easy classes and hard classes. The top 10 easy classes and top 10 hard classes are shown in Figures 3 and 4. Easy classes are salient attributes that are easy to learn, such as “blond hair” and “bald”. Hard classes, on the other hand, are difficult to predict: attributes such as “pale skin” have few positive samples. To train these well, aggressive oversampling of positive samples is required. Following Professor Chen Change’s coursework, CelebA Facial Attribute Recognition Challenge, there are three main approaches to the domain gap problem.

3 Approaches

3.1 Aggressive Data Augmentation

3.1.1 Random Resized Crop

torchvision.transforms.RandomResizedCrop() in PyTorch can be applied. It crops the given image to a random size and aspect ratio, and then resizes the crop to the specified size. Specifically, the output size is set to (256, 256) and the scale is set to (0.5, 1.0). The idea is that a random crop containing only part of the object should still be assigned the same category as the whole object.

3.1.2 Random Erasing

torchvision.transforms.RandomErasing() in PyTorch occludes a randomly selected rectangular region of the image.

3.2 Data Samplers

3.2.1 Uniform Sampler

The input data for the conventional learning branch comes from a uniform sampler, where each sample in the training dataset is sampled exactly once, with equal probability, in a training epoch. The uniform sampler retains the characteristics of the original distribution and therefore benefits representation learning.

3.2.2 Balanced Sampler

The purpose of resampling is to balance the number of samples from the two classes, drawing minority samples more often and majority samples less often, so that overall accuracy can still serve as the optimization goal when training the model.

For clarity, the uniform sampler maintains the original long-tailed distribution, whereas the balanced sampler assigns the same sampling probability to all classes and constructs mini-batches that follow a balanced label distribution.
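The two samplers can be sketched with standard PyTorch utilities. This is an illustration under stated assumptions, not the implementation from the linked repositories: the toy dataset, its 95/5 split, and the choice to balance a single binary attribute are all hypothetical. CelebA has 40 attributes, so a real balanced sampler has to decide which attribute (or combination) to balance.

```python
import torch
from torch.utils.data import (DataLoader, RandomSampler, TensorDataset,
                              WeightedRandomSampler)

torch.manual_seed(0)

# Toy imbalanced binary attribute: 950 negatives, 50 positives (hypothetical).
labels = torch.cat([torch.zeros(950), torch.ones(50)]).long()
features = torch.randn(len(labels), 8)
dataset = TensorDataset(features, labels)

# Uniform sampler: every sample is drawn exactly once per epoch,
# so batches keep the original long-tailed label distribution.
uniform_loader = DataLoader(dataset, batch_size=100, sampler=RandomSampler(dataset))

# Balanced sampler: weight each sample by the inverse frequency of its class,
# so both classes are drawn with equal total probability (with replacement).
class_counts = torch.bincount(labels, minlength=2).float()
sample_weights = (1.0 / class_counts)[labels]
balanced_sampler = WeightedRandomSampler(sample_weights, num_samples=len(labels),
                                         replacement=True)
balanced_loader = DataLoader(dataset, batch_size=100, sampler=balanced_sampler)

def positive_rate(loader):
    """Fraction of positive labels seen over one epoch of the loader."""
    ys = torch.cat([y for _, y in loader])
    return ys.float().mean().item()

uniform_rate = positive_rate(uniform_loader)
balanced_rate = positive_rate(balanced_loader)
print(f"uniform: {uniform_rate:.3f}, balanced: {balanced_rate:.3f}")
```

With the uniform sampler the positive rate stays at the dataset's 5%, while the balanced sampler yields roughly half positives by repeating minority samples.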

3.3 Adjusting the Loss Function

The purpose of adjusting the loss function is to make the model more sensitive to minority samples. The ultimate goal of training any deep learning model is to minimize the loss function. If we increase the loss incurred by misclassified minority samples, the model will learn to identify minority samples better.

A common way to adjust the loss function is to adopt a cost-sensitive loss that penalizes the misclassification of minority classes more heavily. Penalty terms are a common means of regularization during training. When facing imbalanced data, we can use the same idea: multiply the loss of misclassified minority samples by a penalty coefficient. In this way, the model naturally becomes more sensitive to minority samples during training.
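One way to sketch such a penalty coefficient in PyTorch is the pos_weight argument of nn.BCEWithLogitsLoss, which scales the loss of positive targets per attribute. Treating each attribute as an independent binary label with a BCE loss is an assumption made here for illustration, and the positive frequencies below are hypothetical rather than measured on CelebA.

```python
import torch
import torch.nn as nn

# Hypothetical positive-label frequencies for three attributes.
pos_freq = torch.tensor([0.5, 0.1, 0.02])

# Penalty coefficient per attribute: (#negatives / #positives).
# Rare positives get a proportionally larger weight.
pos_weight = (1.0 - pos_freq) / pos_freq

plain_bce = nn.BCEWithLogitsLoss(reduction="none")
weighted_bce = nn.BCEWithLogitsLoss(pos_weight=pos_weight, reduction="none")

# An undecided model (sigmoid(0) = 0.5) on a sample where every attribute is positive.
logits = torch.zeros(1, 3)
targets = torch.ones(1, 3)

plain_loss = plain_bce(logits, targets)
weighted_loss = weighted_bce(logits, targets)
print(plain_loss)
print(weighted_loss)
```

The same miss costs more for the rare attribute than for the balanced one, which is the cost-sensitive behaviour described above.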

One possible improvement is to replace the cross-entropy loss of the original baseline, Equation (2), with the focal loss [2], Equation (1):

FL(pᵢ) = −(1−pᵢ)ᵞ log(pᵢ)    (1)

CE(pᵢ) = −log(pᵢ)    (2)

Here pᵢ is the predicted probability of the correct label for sample i, and γ ≥ 0 is the focusing parameter. Focal loss not only addresses sample imbalance but also pays more attention to samples that are difficult to classify. Mathematically, the factor (1−pᵢ)ᵞ reduces the contribution of the dominant majority samples, since pᵢ → 1 for them, while the contribution of the minority samples remains significant, since their pᵢ is far from 1.0.
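The focal loss described above can be sketched for binary attributes as follows. This is a minimal illustration, not the focal_loss.py from the repository: the function name binary_focal_loss, the default gamma=2.0, and the omission of any class-balancing factor are assumptions.

```python
import torch
import torch.nn.functional as F

def binary_focal_loss(logits, targets, gamma=2.0):
    """Mean focal loss over all elements (hypothetical helper).

    logits:  raw model outputs, any shape
    targets: float tensor of 0/1 labels, same shape as logits
    """
    # Per-element cross-entropy, equal to -log(p) where p is the
    # probability the model assigns to the correct label.
    ce = F.binary_cross_entropy_with_logits(logits, targets, reduction="none")
    p = torch.exp(-ce)
    # Down-weight well-classified samples (p close to 1) by (1 - p)^gamma.
    return ((1.0 - p) ** gamma * ce).mean()

targets = torch.ones(1)
easy = torch.tensor([4.0])    # confident and correct: p is about 0.98
hard = torch.tensor([-4.0])   # confident and wrong:   p is about 0.02

easy_ce = F.binary_cross_entropy_with_logits(easy, targets)
hard_ce = F.binary_cross_entropy_with_logits(hard, targets)
easy_fl = binary_focal_loss(easy, targets)
hard_fl = binary_focal_loss(hard, targets)
print(f"easy: CE={easy_ce.item():.4f}, FL={easy_fl.item():.6f}")
print(f"hard: CE={hard_ce.item():.4f}, FL={hard_fl.item():.4f}")
```

Setting gamma=0 recovers the plain cross-entropy loss, while larger values shrink the loss of easy samples much more than that of hard ones.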

Appendix: Useful Code Resources

[1] Uniform and balanced samplers on the CIFAR dataset, from the paper “BBN: Bilateral-Branch Network with Cumulative Learning for Long-Tailed Visual Recognition”.

https://github.com/Megvii-Nanjing/BBN/blob/master/lib/dataset/imbalance_cifar.py

[2] Uniform and balanced samplers on the CelebA dataset.

https://github.com/NIRVANALAN/face-attribute-prediction/blob/master/celeba.py

[3] Focal loss PyTorch implementation.

https://github.com/bozliu/CelebA-Challenge/blob/main/focal_loss.py